Comparing Classification Models - You’re Probably Doing It Wrong

In my last post, I discussed benchmark datasets for machine learning (ML) in drug discovery and several flaws in widely used datasets. In this installment, I’d like to focus on how methods are compared. Every year, dozens, if not hundreds, of papers present comparisons of ML methods or molecular representations. These papers typically conclude that one approach is superior to several others for a specific task. Unfortunately, in most cases, the conclusions presented in these papers are not supported by any statistical analysis. I thought providing an example demonstrating some common mistakes and recommending a few best practices would be helpful. In this post, I’ll focus on classification models. In a subsequent post, I’ll present a similar example comparing regression models. The Jupyter notebook accompanying this post provides all the code for the examples and plots below. If you’re interested in the short version, check out the Jupyter notebook. If you want to know what’s going on under the hood, please read on.

An Example

As an example, we’ll use the dataset presented in a recent paper by Diwan, AbdulHameed, Liu, and Wallqvist in ACS Omega. In this paper, the authors used a few different ML approaches to build models for bile salt export pump (BSEP) inhibition. They concluded that multi-task machine learning provides a superior model. To reproduce the published work, I built machine learning models using the dataset the authors provided in their GitHub repository. In the example presented below, I compared three different ML approaches.

- LightGBM (LGBM) with RDKit Morgan fingerprints and a path length of 2, sometimes called ECFP4. This is the “conventional ML” baseline.

- A single task message passing neural network (MPNN) as implemented in ChemProp (CP_ST). This provides an example of single-task deep learning.

- A multi-task MPNN as implemented in ChemProp (CP_MT), which acts as the multi-task deep learning method.

I performed 10 folds of cross-validation where all models were trained on the same cross-validation splits. I did two sets of comparisons, one using a random split and the other using a scaffold split. Note that I used a relatively simple cross-validation strategy here. For more sophisticated approaches, please see this post. The code used to build the models, and the results from the cross-validation folds are available in the GitHub repository associated with this post. To evaluate the performance of the models, I applied three widely used performance metrics.

There are differing degrees of religious fervor around which metrics are most important. In the interest of completeness, I’ve included all three.

The (Wrong) Way Methods are Typically Compared

Most papers in our field present the comparisons of classification models in one of two ways. The first is what I refer to as “the dreaded bold table”. Many authors simply report a table showing the average for each metric over N-folds of cross-validation. The method with the highest value for a particular metric is shown in bold. <sarcasm> The table below shows us that the multi-task ChemProp (CP_MT) model outperforms the other two models because it’s the “winner” on two of three metrics, right? </sarcasm>

The other common approach to comparing classification models is to present a “dynamite plot” showing the mean metric value for the cross-validation folds as a bar plot with error bars showing the standard deviation over the cross-validation folds. Papers rarely discuss the standard deviation or the significance of the differences between the cross-validation averages.

Why Is This Wrong?

When comparing metrics calculated from cross-validation folds for an ML model, we’re comparing distributions. When we compare distributions, we need to look at more than just the mean. If we look at the two distributions below, it seems intuitively obvious that the distributions on the left are different, while the distributions on the right are similar.

A common way of determining whether two distributions are different is to look at the overlap of the error bars in a dynamite plot, like the one shown in the previous section. Unfortunately, this approach is incorrect. It’s important to remember that the standard deviation is not a statistical test. It’s a measure of variability. The "rule" that non-overlapping error bars mean that the difference is statistically significant is a myth. This rule doesn't apply to any conventional type of error bar. For a detailed discussion of the incorrect “non-overlap of error bars” rule, see this paper by Anthony Nicholls.

Statistical Comparisons of Distributions

Fortunately, statisticians have spent the last 150 years developing tests to determine whether the arithmetic means of two distributions differ. The most widely known of these tests is Student’s t-test, which we can use to compare the means of two distributions and estimate the probability that the difference could have arisen through random chance. One output of the t-test is a p-value, which indicates this probability. Typically, a p-value less than 0.05, indicating a random probability of less than 5%, is considered significant. While the t-test is an essential tool, it has some limitations. First, the distributions being compared should be normally distributed. When we deal with a small number of folds from cross-validation, it’s difficult to determine that our calculated metrics are normally distributed. Second, the distributions need to be independent. While the training and test sets are independent over a single cross-validation fold, samples are reused over subsequent folds. Third, the distributions should have equal variance. Some have argued that the magnitude of this variance is diminished over cross-validation folds.

An alternative approach that overcomes these limitations is to use a non-parametric statistical test. Non-parametric tests, which typically operate on rank orders rather than the values themselves, tend to be less powerful than analogous parametric tests but have fewer limitations on their application. Most parametric statistical tests have non-parametric equivalents. For instance, the Wilcoxon Rank Sum test is the non-parametric analog for Student’s t-test.

This all sounds great, but I tend to learn from examples. Let’s compare ROC AUC from the three methods listed above on the BSEP dataset. We’ll start by comparing pairs of methods.

- CP_ST vs LGBM

- CP_MT vs LGBM

- CP_MT vs CP_ST

The plots below compare the distributions of ROC AUC for the method pairs. Above each plot, we have the p-values from Student's t-test and the Wilcoxon rank sum test. Examining the figure on the left, which compares the single-task and multitask ChemProp AUCs, we see the two distributions overlap, and the p-values from the parametric and non-parametric tests are nowhere near the cutoff of 0.05. One would be hard-pressed to say that multi-task learning outperforms single-task learning in this case. In the center plot, we see a clear difference between the distributions, and both the parametric and non-parametric p-values are below 0.05. In the plot on the right, the parametric p-value is slightly over 0.05, and the non-parametric value is slightly below.

While plotting the kernel density estimates as we did above is informative, I prefer to look at this sort of data as boxplots. The plots below show the distributions above as boxplots. For me, this provides a clearer view of the differences in the distributions. The boxplots make it easier to see the distribution median, where most of the data lies, and where the outliers are.

Comparing More Than Two Distributions

There’s another aspect we need to consider when comparing the above distributions. The statistical tests we’ve discussed so far are designed to compare two distributions. As we add more methods, the chances of one method randomly being better than another increases. The ANOVA, or analysis of variance, provides a means of comparing more than two methods. While the ANOVA is a powerful technique, it suffers from some of the same limitations as the other parametric statistical methods described above. Friedman’s test, a non-parametric alternative to the ANOVA, can be used in cases where the data is not independent or normally distributed. Friedman’s test begins by reducing the data to rank orders. In this example, we will consider the same comparison of AUC for 3 methods. We start by rank-ordering the methods across 10 folds of cross-validation. In the figure below, each row represents a cross-validation fold, and each column represents a method. A cursory examination shows that multi-task ChemProp (CP_MT) was ranked first 4 times, single-task ChemProp (CP_ST) was ranked first 5 times, and LightGBM (LGBM) was ranked first only once.

We then calculate the rank sums for each column and see that ChemProp single-task has the lowest rank sum.

The values from this analysis can then be plugged into the formula below to calculate the Friedman χ2.

Where N is the number of cross-validation folds, k is the number of methods, and R is the rank sums.

I don’t typically think in terms of χ2 values. To develop some intuition for this, I plotted χ2 vs p.

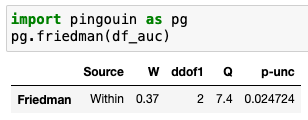

Of course, we don’t have to jump through these hoops to perform Friedman’s test. There are implementations in pingouin, scipy, and several other Python libraries. Here’s an example with pingouin.

Here’s the same example with scipy (and far too many decimal places).

Using Friedman’s Test to Compare Models of the BSEP Dataset

Now that we have the preliminaries out of the way. Let’s apply Friedman’s test to the BSEP dataset we discussed above. The figure below shows one way I like to compare methods. The code used to generate this figure is in the Jupyter notebook associated with this post. Boxplots show comparisons of the three methods with ROC AUC, PR AUC, and MCC over 10 cross-validation folds. Above each plot, we have the p-value from Friedman’s test. In this example we randomly split the data into training and test sets. While we see a significant p-value for ROC AUC, the p-values for PR AUC and MCC are not significant. Is one method superior to another? Given this analysis, it’s hard to say.

If we look at the results below, where we used scaffold splits for cross-validation, we see a similar trend. There is a statistically significant p-value from Friedman’s test for ROC AUC, but the p-values for PR AUC and MCC are not significant.

Post-hoc Tests

At this point, the astute reader may have noticed that Friedman’s test tells us if a difference exists between methods but doesn’t tell us where this difference is. Once we’ve used Friedman’s test to confirm a difference between methods, we can use a post-hoc test to determine where the differences are. Post-hoc tests are just pairwise statistical tests of the individual methods, with one crucial difference. As mentioned above, when we compare more than two methods, there is a higher likelihood of one method being better by chance. To deal with this, we typically correct the threshold p-value for significance. One of the most straightforward approaches to adjusting the threshold for significance is the Bonferroni correction. With this correction, the threshold for significance is divided by n, where n is the number of methods being compared. For instance, if our threshold for significance is 0.05 and we are comparing 3 methods, the threshold becomes 0.05/3 or 0.017. Some argue this is too harsh, and several alternatives have been proposed. You might be thinking, “If we have post-hoc tests, why do we need to perform Friedman’s test?”. Remember that post-hoc tests compare pairs of methods, while Friedman’s test compares all. It’s crucial to perform both tests.

Let’s take a look at an example. In the plots below, we use the pairwise Conover-Friedman test implemented in the scikit-posthocs package to perform the post-hoc tests. In the interest of brevity, we’ll only consider the scaffold split example. In these “sign plots”, each cell shows the significance of the corrected p-value representing the difference between the two methods indicated in the rows and columns. The diagonals, which represent a comparison of the method with itself, are white. The color in the cells represents the degree of statistical significance. The corrected p-values greater than 0.05 (NS = not significant) are colored pink. Significant p-values are shown in progressively darker shades of green. As expected based on Friedman’s test, we see a difference between LGBM and the other methods for ROC AUC. None of the other comparisons was significant.

Another tool for comparing multiple methods is the Critical Difference Diagram (CDD), which is also available in scikit-posthocs. In the CDD, if two methods are connected by a horizontal line, there is no significant difference between the distributions. The position of a vertical line in the CDD represents the mean rank of a method over cross-validation folds. In the top plot in the figure below, we have a CDD for ROC AUC. This plot shows that LGBM has a low mean rank of 0.4, and there is no horizontal line between LGBM and the other two methods. This indicates the difference between LGBM and the two ChemProp variants is significant. For CP_ST and CP_MT, we see similar average ranks and a horizontal line between the vertical lines, indicating that the difference between methods is not significant. In the two lower plots, we see a black horizontal line connecting all three vertical lines. This indicates that there is not a significant difference between methods. As with the analysis above, it’s tough to say that any of the three methods outperforms the others.

Conclusions

I realize the title of this post is provocative and may have come across as arrogant. I'm not the ultimate expert on statistics, and there may be better ways of comparing methods than what I've outlined here. However, we need to do something to improve the state of our field, and we need to do it now. Most papers published today use highly flawed datasets and completely ignore statistical analyses. This needs to stop. I think the best way to change the current situation is to provide clear guidelines on best practices. These guidelines can then be adopted by authors, reviewers, and editors. I’m working with a few friends to assemble a first draft, which we hope to publish as a preprint before the end of this year. If you’d like to help, please let me know.

While I whole-heartedly agree with your overall premise, my question with the statistical approach of considering metrics over the CV folds is that your choice of N (err, K) is always arbitrary. If you were to perform LOOCV, then you'd have a far larger N and thus (artificially) more power for a test (t-test, ANOVA, or otherwise) to call significance. I think that a repeated CV performed over many hundreds (thousands) of randomized CV partitions, aggregated for each random partition, gives a different, and perhaps more useful, empirical distribution of accuracy results (MCC, AUROC, otherwise), from which you can directly read off expected frequencies of better/worse performance (without regards to parametric assumptions).

ReplyDeleteThis is an interesting idea and something I need to play around with. I agree that the choice of N (or K) folds is arbitrary. However, in many cases, hundreds of CV folds is impractical.

DeleteI believe the number of folds becomes a crucial consideration when the number of models we are comparing is large. In particular, suppose we have 5 folds, but we're training a model for each one of 1000 hyperparameter configurations. Depending on the dataset noise, it's not unlikely that we will have some configurations completely outperforming a baseline purely by chance on all folds. On the other hand, if we're just comparing three models as above, five folds is completely sufficient.

DeleteI haven't got into the maths yet, but it should be straightforward to get an analytical estimate for P("k-fold hypothesis test is passed by chance" | number of models) if we have a measure of how variable the evaluation metric is. That could be used to find a k such that it's e.g. <1% that some model passes the test by chance

If you have a formula for determining the optimal value of k, I'd love to see it. Btw, do you find that you get significant improvements in performance by trying 1000 hyperparameter combinations?

DeleteActually, I would probably re-phrase it as "we can use the number of folds (e.g. 5 or 10) to assess what kind of a hyperparameter space size is reasonable to explore". I agree that 1000 combinations is not going to give you good improvements -- and part of it is that it becomes harder to tell whether it's a genuine improvement or a spurious one.

DeleteSimplest form of the argument:

DeleteIf we're looking for an improvement based on a hypothesis test with p<0.01, but try out 100 models with independent noise, there's a 1 - (0.99)^100 ~= 0.63 chance at least one will pass the test.

Increasing the number of folds would make it less likely for "identical performance models" to get lucky like this (although in the real world with real data, increasing k will generally decrease all p-values, and instead the problem will be that the hypothesis test passes but the effect size is very small -- a whole other rabbit hole...)

One potential issue with lots of CV folds is time. Running 10-folds of cross validation for an ensemble ChemProp model for the BSEP dataset in this post took about a day. Increasing this by 5 to 10x is going to be prohibitive. I guess we could throw more compute at the problem but that becomes out of reach for some people.

DeleteWhen comparing distributions, I like to use the Kolmogorov-Smirnov test. Because it's idea is very simple and the name sounds like two chess champions. :-)

ReplyDeleteI hadn't thought about it that way, but I guess you could express the data as an ECDF and use the KS test. I'm not sure the results would be different.

DeleteGreat post!

ReplyDeleteI'm a big fan of the student's paired t-test in particular. I see you touched that area by ranking the models in every single fold for the Friedman's test. What do you think about doing pairwise model A and model B comparisons by looking at the pairs of their performances (or, equivalently, the difference between their performances) on every single fold? This allows for (a) paired student's t-test to check whether the performances come from the same distribution; (b) computing Cohen's d to get a sense of how confidently one model is better than another. As a bonus, we can forget the maths and qualitatively compare models with a simple scatter plot, each point corresponding to a fold, where points above line = model B is better, below = model A.

In theory, you can have the ROC-AUC distributions be very overlapping, but have model A better than model B in every single fold, so this kind of analysis might shine more light on the comparison as long as we have paired evaluation data (and with CV we do, of course)

My apologies, I can see you make use of the paired nature of the CV data with the Conover-Friedman test; however, I'm still curious about your thoughts around the pairwise t-test VS such non-parametric methods, as I find looking at the exact differences to be slightly more intuitive!

DeleteA rule of thumb I heard in grad school: use the second-best performing model from a paper. The problem being information leakage from multiple evaluations against the test set unintentionally influencing model design.

ReplyDeleteDoes adding a p-value to model comparisons give a false sense of confidence?

Any suggestions on how to avoid leakage? Perhaps the p-value gives and inflated confidence. There are a lot of ways to make these comparisons. At the end of the day, I'd like the field to agree to use something beyond a bold table and a bar plot.

DeleteIn other ML domains, ImageNet and CASP have relied on private validation/test sets in competitions but even these can be fraught: https://www.technologyreview.com/2015/06/04/72951/why-and-how-baidu-cheated-an-artificial-intelligence-test/

DeleteOtherwise, information leakage and inflated model performance/p-values are always a risk. Practically, evaluating against a fixed set of benchmarks/tasks - rather than an author-selected subset - may curb overfitting.

Thanks for all your writing here!

The SAMPL challenges have had a few smaller datasets that looked a LogP and pKa. A blind challenge for ADME properties would be pretty expensive. For the field to advance, I think we need some good standard datasets and solid statistical metrics for method comparison

DeleteCongrats for another excellent post. I had been reading on this topic after seeing your previous blog post/Notebook. Regarding tests, Dietterich also recommends the 5x2-fold cross-validation paired t-test (available in mlextend). Alpaydin suggests a 5×2cv F-test, Bouckaert a 10×10cv with calibrated degrees of freedom and corrected variance (as proposed by Nadeau and Bengio). All of these have the same problem (?): using repeated sampling with cheminformatics data might not be the best approach. From Classifier Technology and the Illusion of Progress: "Intrinsic to the classical supervised classification paradigm is the assumption that the data in the design set are randomly drawn from the same distribution as the points to be classified in the future". In the end, I concluded that choosing a model that performs well (could even be "the best" from the dreaded bold table) and comparing it to a baseline model using McNemar's test would be enough to justify its choice (+ defining the applicability domain). If you would like to use this material I collected in a preprint, please let me know how I can share. It will be better than keeping it in a Notebook that I'll never get to finish!

ReplyDeleteThanks! I wrote about McNemar's and 5x2 in a 2020 post.

Deletehttps://practicalcheminformatics.blogspot.com/2020/05/some-thoughts-on-comparing.html. I realize that we live in a world of non-IID data, but I'm not sure what to do about it. I'd love to see what you've written. Can you send me a DM on LinkedIn or Twitter (oops X) with your contact info?

Hi Pat, when assessing ML models you do need to be able to distinguish bad models from good models being used outside their applicability domains. As I’ve said before, genuinely categorical data are rare in drug discovery and there may be scope for using the continuous data from which the categorical data have been derived for assessment of model quality. For example, high sensitivity of model quality to threshold values used to categorize continuous data should ring alarm bells. Also, misclassification is a bigger error when the data value is a long way from a class boundary than when it is close to the class boundary. Compounds are classified as bile salt export pump (BSEP) inhibitors or noninhibitors by Diwan, AbdulHameed, Liu, and Wallqvist. Can all the approaches that you’re recommending easily be applied when there are more than two classes or when the classes are ‘ordered’ (i.e., high, medium and low)?

ReplyDeletePK, you seem to be repeating yourself on multiple platforms. Please see my responses on LinkedIn.

DeleteThis comment has been removed by a blog administrator.

ReplyDeleteHi Pat

ReplyDeleteI share your frustration with the way that methods are compared in the literature, and I don't disagree with many of your comments and suggested methods. However, I have different problems with the way methods are evaluated.

My first beef is a really simple one, and that is that the evaluations do not take into account the typical errors in the measurements to which they're being applied. If the experimental measurements have a limited ability predict themselves (that is, experiments on day 1 are only correlated to experiments on day 2 with a Pearson or Spearman correlation of 0.85), how can the models do better than that, and how can someone claim to have one model that beats another by less than the error defined by the degree of correlation? An obvious example is where the experimental results have (or should have) no more than 2 significant figures, but the model accuracies are quoted to 4 or 5 significant figures. The machine learning data scientists seem to be guilty of this more than people in industry, but not by much. Drives me crazy. Note that being aware of this would also make most differences between the methods largely vanish, which is consistent with what I've experienced in the real world.

My second problem is with the basic definition of what is good. We want a useful model. The methods are almost always evaluated without a possible context for how they're going to be used. Probably the most common use case for models in drug discovery is virtual screening, more specifically the application of a model to a large set of available compounds in order to pick a subset of a larger set for screening. In this context, the overall accuracy of the model is not what makes it useful. What we're concerned about, in this situation, is maximizing the probability of picking an active, given a positive prediction. So, we want to maximize the true positive rate and minimize the false positive rate. Losing positives (i.e. having lots of false negatives) is (usually) irrelevant. That renders many commonly-used measures of goodness irrelevant in terms of real world evaluation.

Sorry, "that is, experiments on day 1" should read "for example, experiments on day 1".

DeleteThanks for the feedback Lee. To point #1, it's straightforward to calculate the maximum correlation one can expect given experimental error. I wrote about this in 2019. https://practicalcheminformatics.blogspot.com/2019/07/how-good-could-should-my-models-be.html. To your second point, AUPRC does a decent job of balancing true and false positives for sparse datasets.

DeletePat -

DeleteI was proposing that differences between models may be trivial within the context of experimental variability, even if one model is statistically different to the another considered in isolation. Do you agree?

On the second point, your suggestion to use the PR AUC curve is a good one, but I would contend just using PPV for the comparison of models for most virtual screens is a better measure.

I see what you mean. It should be easy enough to simulate this. From a prospective standpoint, we definitely see a difference in between model types.

DeleteFor PR AUC vs PPV. The PR curve gives a lot more information than a single value,, why not use it?

The recent paper from Cheng Fang and colleagues at Biogen also saw differences in prospective predictions. https://pubs.acs.org/doi/10.1021/acs.jcim.3c00160

DeleteOn PR AUC, the x-axis is recall. For the virtual screening example I gave, if you don't care about losing potential actives, then understanding PPV as a function of recall is irrelevant. The only thing that matters is the overall PPV. In other situations, understanding the dropoff of PPV is intellectually seductive, although prospectively you would never know where that dropoff occurs for your unknown set. PPV can be 1 for a model that misses half the actives, but PR AUC will penalize that model if the missing actives are further down the ranked list.

DeleteOn the Biogen paper, I like the time slicing, but how would they know that the differences between the models is real, given that they don't describe the experimental variation of the measurements? (OK, I'll answer my own question. In this case, the fact that some models outperform others consistently over time is a good hint).

Thanks Pat for raising this important topic. I hope this discussion brings us closer to clearer guidelines.

ReplyDeleteA good recent paper relevant to the metrics across CV folds discussion is here: https://arxiv.org/pdf/2104.00673.pdf. I highly recommend reading this if you are interested in this topic.

The authors explain how there is high correlation between performance across CV folds which leads to underestimation of variance. This results in inflated type I error rate for statistical tests, and overestimation of confidence, which is a concern for your example. Some people have argued that the uncertainty quantification is better than nothing, but I think we should try to suggest methods that address this overconfidence.

The authors also provide a good overview of methods others mentioned and their limitations. McNemar and Dietrech’s 5X2 have good type I error control but they are very conservative. So you wont be overconfident but you will likely miss meaningful differences. I did some simulations in my dissertation that showed good performance for both.

The most principled approach I have seen is presented in that paper. But it is only available as an R package and it involves nested CV so it might be computationally expensive. McNemar and Dietrech’s 5X2 are good options if you are in python because of mlextend. If you are doing virtual screening we published a method with better power than McNemar and way better control for multiple testing over Bonferonni for a hit enrichment curve: https://jcheminf.biomedcentral.com/articles/10.1186/s13321-022-00629-0

You always need to consider applicability domain of the model as others have mentioned. Results will change dramatically when you venture beyond that as people often do.

Thanks, Jeremy! I need to make some additional comparisons.

DeleteHi Pat, this is a very interesting articles.

ReplyDeleteI'm also checking other ones.

Thank you for sharing your knowledge

Johnny H ;)

Hi Pat, An excellent post as always!

ReplyDeleteThe discussion in the comments is particularly thought-provoking.

There is a convergent evolution of ideas in ML where we solve the same problems separately on our own so your post and the idea of creating some guidelines are extremely helpful in reducing wasted time!

For completeness I thought I'd mention the paper by Demsar 2006 which I have used as a source of inspiration and the subsequent paper by Garcia, 2008 is also worth a look. These papers are not mentioned above but could be of interest to your readers. Their approaches are consistent with the ideas expressed in your comments above.

Cheers,

Demšar, J. 2006. 'Statistical comparisons of classifiers over multiple data sets. ', Journal of Machine Learning Research, 7: 1-30.

Garcia, S., and Francisco Herrera. 2008. 'An Extension on “Statistical Comparisons of Classifiers over Multiple Data Sets” for all Pairwise Comparisons', Journal of Machine Learning Research, 9: 2677 - 94.

Nice study and comparison, highlighting a very important topic, that nowadays people don't use to compare the models. Great comment also from Dr. Slade Matthews mentioning two very nice old papers on the topic. We use this approach in 2011 to compare several classifiers in a cheminformatics classification study following the same ideas. Le-Thi-Thu, H. 2011. 'A comparative study of nonlinear machine learning for the "in silico" depiction of tyrosinase inhibitory activity from molecular structure.' Molecular Informatics,30, 6-7, 527 - 537.

ReplyDelete