Where's the code?

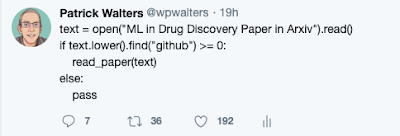

Yesterday I posted this tweet, which seemed to resonate with quite a few people. OK, at least it got more likes than my tweets usually do. Of course, while writing snarky tweets is fun, it doesn’t do anything to address the underlying problem. Computational Chemistry and Cheminformatics (including applications of machine learning in drug discovery) are among the few remaining fields where publishing computational methods without source code is still deemed acceptable. I spend a lot of time reading the literature and am constantly frustrated by how difficult it is to reproduce published work. Given the ready availability of repositories for code and data, as well as tools like Jupyter notebooks that make it easy to share computational workflows, this shouldn’t be the case. I really liked this tweet from Egon Willighagen, one of the editors of The Journal of Cheminformatics (J. ChemInf.).

Shouldn’t we be able to download the code and data used to generate a paper and quickly reproduce the results? Even better, we should be able to try the code on our own data and determine whether the method is generally applicable. When code is available I do this and try to provide feedback to the authors on areas where the method or the paper could be improved.

High-impact journals such as Science and Nature now require, or at least strongly encourage, the inclusion of source code with computational methods. Here’s the wording from the editorial policy at Science.

We require that all computer code used for modeling and/or data analysis that is not commercially available be deposited in a publicly accessible repository upon publication. In rare exceptional cases where security concerns or competing commercial interests pose a conflict, code-sharing arrangements that still facilitate reproduction of the work should be discussed with your Editor no later than the revision stage.

Nature is a bit less prescriptive, but still strongly urges the release of source code.

Upon publication, Nature Research Journals consider it best practice to release custom computer code in a way that allows readers to repeat the published results. We also strongly encourage authors to deposit and manage their source code revisions in an established software version control repository, such as GitHub, and to use a DOI-minting repository to uniquely identify the code and provide details of its license of use. We strongly encourage the use of a license approved by the open source initiative. The license of use and any restrictions must be described in the Code Availability statement.

At least one journal in our field is making an effort to ensure that the methods described in papers can be easily reproduced. J. ChemInf. has the following as part of its editorial policy.

If published, the software application/tool should be readily available to any scientist wishing to use it for non-commercial purposes, without restrictions (such as the need for a material transfer agreement). If the implementation is not made freely available, then the manuscript should focus clearly on the development of the underlying method and not discuss the tool in any detail.

Each J. ChemInf. paper also includes a section entitled “Availability of data and materials”, which specifically lists the availability of the code and data used in the paper. The editors of J. ChemInf. do a reasonably good job of enforcing this policy, although the occasional paper slips through without code or with vague references to the data used. As far as I can tell, the other prominent Cheminformatics journals have no comparable requirement:

- The Journal of Chemical Information and Modeling

- The Journal of Computer-Aided Molecular Design

- Molecular Informatics

- The Journal of Molecular Graphics and Modeling

What can we do about this?

- Pressure the journals in our field to create editorial policies that enforce the reproducibility of computational methods. Write to the editors of the journals that you read regularly and let them know that reproducibility is important to you.

- As reviewers, either reject papers which aren’t reproducible or, at the very least, strongly suggest that the authors release their code with the paper. I’ve done this several times, and in many cases, the authors have released their code.

- Release code with your papers! If enough people do this, it will become the norm. Ultimately, we need a cultural shift so that publishing a paper without code is deemed socially unacceptable. At one point in time, it was socially acceptable to smoke in public. Eventually, enough people got fed up, and cultural norms shifted.

This change has already occurred in other fields. In the 1990s, one could publish a protein crystal structure without depositing the coordinates in the PDB. I had a colleague who wrote software to extract 3D coordinates from stereo images in journals. Editorial policies changed and now no one would think of publishing a crystal structure without prior deposition of coordinates. Not too many years ago, it was possible to publish medicinal chemistry papers that were based on unreleased proprietary data. Due to changes in the editorial policy at the Journal of Medicinal Chemistry, this is no longer acceptable. This insistence on scientific rigor did not diminish the quality of the papers or the number of papers published. To the contrary, it made the fields stronger and made it easier to build upon the work of others.

I’ve tilted at this windmill before. In 2013, I wrote a perspective in the Journal of Chemical Information and Modeling that called for new standards for reproducibility of computational methods. As I said in a recent talk, this was the equivalent of digging a hole and yelling into it. It may have made me feel better for a few minutes, but it had no impact whatsoever. Am I missing something here? Are there legitimate reasons to not release code with a paper? Are there other ways that we can encourage people to include code with their papers? I’d appreciate any comments on how we can improve the current situation.
