My Science/Programming Journey

Note: This post is purely self-indulgent and probably won't be interesting to anyone who is not me. You have been warned.

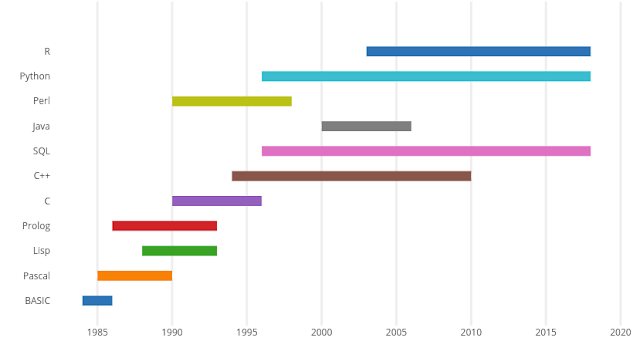

A few recent tweets on programming languages got me thinking about my scientific/programming journey. A long time ago in a galaxy far away ...

Phase I Varian/Analytichem 1984-1990

When I graduated from college in 1984, I got my first full-time job in science. I was hired as the head of manufacturing chemistry at a small company called Analytichem International in Harbor City, California. Before this, while I was an undergrad at UCSB, I worked part-time for a company called Petrarch Systems, synthesizing siloxane polymers for, among other things, medical devices and gas chromatography columns. This experience with siloxane chemistry was the reason I got the job at Analytichem, where they were doing similar chemistry on surfaces. I started work at Analytichem intending to have a career as a polymer chemist. I'd had very little experience with computers and certainly didn't see a future as a computer geek.

One of my responsibilities at Analytichem was the manufacture of HPLC columns. This involved a bit of synthetic chemistry to create the bonded silica stationary phase. Following the synthesis of the bonded silica, we used a system of pumps, valves, and other hardware to pack the stationary phase into the HPLC column. After the columns were packed, they were tested by injecting mixtures of chemical compounds. The testing process was labor-intensive and time-consuming. It required making injections into an HPLC, manually measuring chromatograms (with a ruler), and generating reports that were shipped with the columns. I figured that there had to be a way to automate some of this work. Automation had the potential to dramatically improve our productivity and, more importantly, free me from some tedious, boring work. I did some research, and fortunately my boss, Mario Pugliese, allowed me to purchase an autosampler, a switching valve, a computer, and some data analysis software. The software, from Nelson Analytical, did some of what we wanted but was missing a few key components necessary for our workflow.
Fortunately, Nelson Analytical allowed users to write their own routines, in compiled BASIC, and link the routines into the analysis software. Being young and fearless, I bought a book on BASIC programming and got to work. After a couple of weeks, I had a working program that would make injections, switch columns on the switching valve, analyze the chromatograms and calculate capacity factors and theoretical plate counts, key metrics for quality control of the HPLC columns. It took 5-10 minutes to compile and link the BASIC program, but I thought it was great.
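For readers who never measured a chromatogram with a ruler, the two quality-control numbers mentioned above come from standard formulas: the capacity (retention) factor k' = (tR - t0)/t0, and the plate count N, commonly estimated either as 8 ln 2 (about 5.54) times (tR/w_half)² from the peak width at half height, or as 16(tR/w_base)² from the tangent baseline width. The Python sketch below computes both. It is not a reconstruction of the original compiled-BASIC routines, and which width convention those routines used is not known.

```python
import math


def capacity_factor(t_r: float, t_0: float) -> float:
    """Capacity (retention) factor k' = (tR - t0) / t0.

    t_r is the analyte retention time, t_0 the dead (void) time, same units.
    """
    if t_0 <= 0:
        raise ValueError("dead time t_0 must be positive")
    return (t_r - t_0) / t_0


def plate_count_half_height(t_r: float, w_half: float) -> float:
    """Theoretical plates from the peak width at half height: N = 8 ln2 (tR / w_half)^2."""
    if w_half <= 0:
        raise ValueError("peak width must be positive")
    return 8 * math.log(2) * (t_r / w_half) ** 2


def plate_count_tangent(t_r: float, w_base: float) -> float:
    """Theoretical plates from the tangent baseline width: N = 16 (tR / w_base)^2."""
    if w_base <= 0:
        raise ValueError("peak width must be positive")
    return 16 * (t_r / w_base) ** 2
```

With a retention time of 5.0 min and a dead time of 1.0 min, `capacity_factor(5.0, 1.0)` gives 4.0. The two plate-count functions differ only in which width is measured, so they should agree closely for a well-behaved Gaussian peak.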

To me, this was magic: I was able to program the computer (an IBM PC-XT, with 256K of memory and a 10MB hard drive) and do useful things. I was hooked. I started writing more software to organize the results we were generating in the lab. I discovered the wonders of spreadsheets with Lotus 1-2-3, word processing with WordStar, and databases with Borland's Reflex (and later Paradox). I started recording all of the results we were generating in a database, which was great; it was so much easier than digging through my lab notebook for data. One day, through a chance encounter with a friend of a coworker, I met a guy who was a programmer. We started talking, and I proudly told him about the BASIC programming I had been doing. He kind of chuckled and told me that I should check out Turbo Pascal. I went to the small computer section in the back of Barnes & Noble (remember bookstores?) and bought a copy of Turbo Pascal (it fit on one floppy disc) for $50. Turbo Pascal was probably the first integrated development environment; it had an editor (which used WordStar commands) and a compiler (later versions also had a debugger). I quickly became a Turbo Pascal convert. The syntax was so much cleaner than what I had been dealing with in BASIC. No line numbers, no GOSUB, no GOTO; it was like someone had removed the handcuffs. Even better, Turbo Pascal programs compiled almost instantly. How could I possibly ask for more?

Turbo Pascal also brought me my first exposure to open source software (although we didn't call it that). There were programming magazines with articles that were accompanied by a floppy disc of software. A guy named Glen Ouchi started a group called the Turbo User Group (TUG). For a small fee, he would send a disc with source code for some cool Turbo Pascal utilities. At the time, I didn't know what Unix was, but I learned a lot of utilities like awk and sed from the versions distributed on the TUG discs.

Over the next couple of years, I hired people to work in the lab and spent more and more time programming. During this period, I spent a lot of time hanging out in the computer section in the back of Barnes & Noble. This section not only had computer books but also software and, best of all, a couple of Macs that people could play on. One day in 1986, I walked in and saw a display that said "Turbo Prolog - the natural language of artificial intelligence". I had no idea what AI was, but it sure sounded cool. I ponied up $50 and bought the package, which included a book and a disc with a Prolog interpreter and development environment. I spent the next few months learning Prolog, starting with implementations of things like the Towers of Hanoi and moving on to programming expert systems. One of the products we were making at Analytichem was a series of solid phase extraction (SPE) columns for sample preparation. We had a "database" (mostly a bunch of word processing documents) of SPE methods. We reformatted the database into an expert system that enabled users to select the most appropriate SPE method. The expert system caught on and was used by the technical support group and featured at several trade shows.
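At its core, an expert system like this is a set of if-then rules checked against facts about the user's sample. The Python sketch below is a minimal first-match rule engine, a deliberately simpler stand-in for the backward chaining a Prolog version would do. The fact names (`matrix`, `analyte`), the rules, and the method labels are all hypothetical placeholders, not the Analytichem SPE methods.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Facts = Dict[str, str]


@dataclass(frozen=True)
class Rule:
    name: str
    condition: Callable[[Facts], bool]
    method: str


# Hypothetical rule base: the conditions and method labels are placeholders.
RULES: List[Rule] = [
    Rule("aqueous-nonpolar",
         lambda f: f.get("matrix") == "aqueous" and f.get("analyte") == "nonpolar",
         "Method A"),
    Rule("acidic-analyte", lambda f: f.get("analyte") == "acidic", "Method B"),
    Rule("basic-analyte", lambda f: f.get("analyte") == "basic", "Method C"),
]


def recommend(facts: Facts, rules: List[Rule] = RULES) -> Optional[Tuple[str, str]]:
    """Return (rule name, method) for the first rule whose condition holds.

    Returning the rule name alongside the method gives a crude "why" trace,
    a common feature of expert systems.
    """
    for rule in rules:
        if rule.condition(facts):
            return rule.name, rule.method
    return None  # no rule fired; a fuller system would ask a follow-up question
```

Rule order matters in a first-match engine: more specific rules (such as the two-condition `aqueous-nonpolar` rule) should come before more general ones.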

Sometime around 1987, Analytichem was acquired by Varian Instruments. One of the great parts of this for me was that Varian had all kinds of people who were doing cool things with computers. One of them was a guy named Joe Karnicky, who was developing expert systems. Joe got me remote access to computers at Varian in Walnut Creek and introduced me to the wonders of programming in LISP. After a couple more years at Varian/Analytichem, I was convinced that my career lay in computing rather than in continued work in the lab. I knew that there was a lot I needed to learn, and I started looking for the best place to do that.

Fortunately, another chance encounter sent me down the right path. Prof. Mike Burke, an analytical chemist at the University of Arizona, was a consultant for Analytichem and would visit the company a few times a year. On one of his visits, I brought up my desire to focus my career on computing and asked his advice. Dr. Burke mentioned that the University of Arizona had a new faculty member, Dan Dolata, who was focusing on applications of artificial intelligence in chemistry. A few weeks later, I drove to Tucson to visit the University of Arizona and meet my future graduate school advisor.

Phase II Arizona 1990-1995

A few months later, I started grad school. One of the first projects I worked on was using the MM2 force field to minimize molecules from the Cambridge Structural Database (CSD). To carry out these calculations, I had to convert chemical structures from the FDAT format supported by the CSD to an MM2 input file, where each line was referred to as a "card". One of the tricky bits of this conversion was that the MM2 input file required the specification of each atom's hybridization (sp2, sp3, etc.), which wasn't in the FDAT file, so I had to write some code to calculate it. Fortunately, Elaine Meng and Richard Lewis in Peter Kollman's group at UCSF had just published a paper describing a method for calculating hybridization from geometry. I sent Elaine an email, and she kindly sent me her FORTRAN code. I recoded her method in C, added the appropriate input and output routines, and I was in business.
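The Meng and Lewis method is not reproduced here, but the basic idea of inferring hybridization from geometry can be sketched with bond angles alone: an atom whose neighbors sit about 180° apart looks sp, about 120° looks sp2, and about 109.5° looks sp3. The Python sketch below averages the angles around an atom. The cutoffs (155° and 115°) are assumptions chosen for illustration, and atoms with a single neighbor are left undetermined, since a real method would need bond lengths or other cues for them.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def bond_angle(a: Vec3, center: Vec3, b: Vec3) -> float:
    """Angle a-center-b in degrees."""
    u = [a[i] - center[i] for i in range(3)]
    v = [b[i] - center[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(x * x for x in v))
    # Clamp so floating-point rounding cannot push acos outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def guess_hybridization(center: Vec3, neighbors: Sequence[Vec3],
                        sp_cutoff: float = 155.0,
                        sp2_cutoff: float = 115.0) -> Optional[str]:
    """Classify an atom as sp, sp2, or sp3 from the mean angle to its neighbors.

    The cutoffs are illustrative assumptions, not values from the literature.
    Returns None when there are fewer than two neighbors (no angle to measure).
    """
    if len(neighbors) < 2:
        return None
    angles = [bond_angle(neighbors[i], center, neighbors[j])
              for i in range(len(neighbors))
              for j in range(i + 1, len(neighbors))]
    mean_angle = sum(angles) / len(angles)
    if mean_angle >= sp_cutoff:
        return "sp"
    if mean_angle >= sp2_cutoff:
        return "sp2"
    return "sp3"
```

For an atom at the origin with neighbors at (1, 0, 0) and (-1, 0, 0), the single 180° angle gives "sp"; four neighbors at the corners of a tetrahedron give angles of about 109.5° and "sp3".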

In the subsequent weeks, I added a few more input and output formats to fit the needs of the projects I was working on. At this point, others in the lab started taking note of what I was doing and asked me to add other file formats. I decided that the program needed a name and borrowed the name Babel from one of my favorite books, "The Hitchhiker's Guide to the Galaxy" by Douglas Adams. I spent the holiday break polishing the code, and Babel became a mainstay in our lab. In my second year of grad school, Matt Stahl, who was from Seattle and came to Arizona primarily to get away from the rain, joined our lab. Matt was a talented programmer and made some much-needed updates to the fundamental Babel infrastructure.

In 1992, we decided it was time to release Babel into the wild and sent out an announcement on the Computational Chemistry List (CCL), which was the primary mode of communication for the computational chemistry community at the time. The response was overwhelming: within a month we had hundreds of downloads, and quite a few people sent requests for additional file formats. It was sometimes challenging to add file formats when we only had one or two examples, but we did our best. Babel was a great experience for both Matt and me; we met a lot of people and got a lot of satisfaction out of seeing our code widely used. Toward the end of our time in grad school, Matt started working on a C++ version of the libraries behind Babel, which he called OBabel. When Matt moved to OpenEye Scientific Software, this library became OELib, which then morphed into the Open Babel project that persists to this day. Although I no longer have anything to do with the project, a few hints of my code still remain.

Phase III Vertex 1995-2016

In 1995, I finished grad school and went to work at Vertex Pharmaceuticals in Cambridge, MA. Much of my work involved continued development of a conformer generation program called Wizard 2 that Matt Stahl and I had developed in grad school. Wizard began its life as a program written by our advisor, Dan Dolata, during a post-doc at Oxford University. Wizard worked by combining fragments (rings, alkyl chains, etc.) to generate conformations of molecules. The logic defining the depth-first search that Wizard used was written in Prolog, and the bits that manipulated geometry were written in Fortran. This sounds elegant, but in practice it was painful. We only had one machine (a VAX) with a Prolog interpreter, and the Prolog/Fortran interface was pretty brittle.

After trying a number of approaches, Matt and I finally decided to rewrite the entire program in C. We used the libraries that formed the basis of Babel as the foundation and wrote a lot of additional code to handle geometry, pattern matching, etc. We coded a variety of search strategies, including the original depth-first search, an A* search, a genetic algorithm, and simulated annealing. After I had been at Vertex for about a year, Matt joined us from a post-doc he had been doing in the Corey group at Harvard. Matt set to work rewriting Wizard in C++ using the OBabel code he had been developing. After some time at Vertex, Matt decided to move to OpenEye Scientific Software and continued to evolve conformational searching programs. The end result of Matt's work was the industry-leading program Omega, which I and hundreds of others continue to use. I have to say that the 6-7 years I spent collaborating with Matt were some of the most fun and productive of my professional career.
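The depth-first strategy described for Wizard can be illustrated in a few lines: assign a conformational choice to one piece at a time, and abandon a partial assembly the moment it becomes unacceptable, so nothing built on top of it is ever explored. The Python sketch below applies that idea to a chain of rotatable bonds rather than to Wizard's ring and chain fragments. The three torsion states and the single pruning rule (rejecting adjacent gauche+/gauche- pairs, the classic syn-pentane clash) are simplifying assumptions, not Wizard's actual rules or energy model.

```python
from typing import List, Tuple

# Discrete torsion states in degrees: gauche+, anti, gauche- (an assumption;
# real conformer generators use richer, fragment-specific libraries).
TORSION_STATES: Tuple[int, ...] = (60, 180, 300)


def clashes(previous: int, current: int) -> bool:
    """Toy pruning rule: adjacent gauche+/gauche- torsions (syn-pentane) are rejected."""
    return {previous, current} == {60, 300}


def dfs_conformers(n_bonds: int) -> List[Tuple[int, ...]]:
    """Enumerate torsion assignments depth-first, pruning bad prefixes early."""
    results: List[Tuple[int, ...]] = []
    partial: List[int] = []

    def extend(depth: int) -> None:
        if depth == n_bonds:
            results.append(tuple(partial))
            return
        for state in TORSION_STATES:
            # Skip this whole branch if the new torsion clashes with the previous one.
            if partial and clashes(partial[-1], state):
                continue
            partial.append(state)
            extend(depth + 1)
            partial.pop()  # backtrack before trying the next state

    extend(0)
    return results
```

For two rotatable bonds, `dfs_conformers(2)` keeps 7 of the 9 possible combinations, discarding only (60, 300) and (300, 60). The benefit of pruning grows with chain length, because every rejected prefix removes an entire subtree from the search; swapping the loop for a priority queue or a population of candidates is roughly how the A*, genetic algorithm, and simulated annealing variants differ.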

During my time in grad school and early in my career at Vertex, I had been programming in Perl. The language had a somewhat concise syntax and rich capabilities for dealing with text. Like most people who worked with data, I spent a lot of time munging and converting data files with Perl. I had even written a rudimentary cheminformatics toolkit in Perl; it wasn't fast, but you could do a lot with a little bit of code. About a year into my time at Vertex, I heard about a Perl wrapper around the Daylight toolkit that had been written by Alex Wong at Chiron. For those who may not remember, the Daylight toolkits were the first cheminformatics toolkits to enable SMILES processing and SMARTS matching. Daylight had CLogP, SMIRKS for processing reactions, and lots more. The ability to access all of this functionality from Perl was incredibly powerful: in a few lines of code, I could easily manipulate large chemical databases.

In 1997, Matt Stahl and I attended the first Summer School put on by Daylight Chemical Information Systems, a one-week, in-depth introduction to the Daylight toolkits. While there, I met Andrew Dalke, who was working at a Santa Fe informatics company called BioReason. Andrew had developed a Python interface to the Daylight toolkits. At the time, I didn't have a lot of experience with Python, but Andrew's implementation had the advantage that it automatically took care of memory management. For those who don't remember life before automatic garbage collection, we used to have to allocate and deallocate memory manually (yes, we also walked six miles uphill to school in the snow). Since the Daylight toolkits were written in C, they required calls to dt_alloc and dt_dealloc to allocate and deallocate memory. I would invariably forget to call dt_dealloc, create a memory leak, and crash my program.

Andrew's implementation of PyDaylight was amazing. It took care of all of the memory management for you. In addition, the syntax of Python was much cleaner, and object-oriented programming was elegantly implemented. Object-oriented programming in Perl, on the other hand, was a horrible hack involving syntax like "MyClass->@{}" or something to that effect. It's one of those things I've tried to forget. Around the same time that Andrew was working on PyDaylight, two different people were independently working on Python wrappers for OELib, the C++ library that evolved from Babel: Bob Tolbert, who was working at Boehringer Ingelheim in Connecticut, and Neal Taylor, who was working at BASF in Worcester, MA. Neal was in the process of moving back to Australia to start Desert Scientific Software, so I traded him an old Silicon Graphics workstation for his code. Bob also sent me his version, which eventually evolved into the Python wrappers for the OpenEye toolkits. By 1998, I was a fully committed Python programmer. I rewrote most of the tools I'd written in Perl and used Python and the OpenEye tools as the basis for most of the cheminformatics work I was doing.

Around 1999, I had been working at Vertex for four years, and no one in the company was happy with the commercial product we were using to store and search our chemical and biological data. The interface was clunky, and the searches were very slow. Daylight had just introduced a database cartridge that allowed users to search chemical structures and biological data simultaneously with a single SQL query. I had been using SQL databases for a while, so we started discussing the prospect of building a full-fledged chemical database system. Fortunately, around the same time, Trevor Kramer, a talented programmer who also understood chemistry and biology, started work at Vertex. At the time, Trevor was primarily a Java programmer, so he worked on the interface while I worked on the database backend. In the first version of VERDI, the Vertex Research Database Interface, the interface was written in Java, while the database engine was written in Python. The two portions of the program communicated through XML files. This was a good first solution, but debugging and optimization were difficult.
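The first VERDI design, a Java front end and a Python engine exchanging XML files, amounts to a small request/response protocol. The Python sketch below shows the engine side under invented assumptions: the element names (`query`, `filter`, `results`, `row`, `col`), the in-memory `RECORDS` table, and the exact-match filtering are all hypothetical, and strings stand in for the files the original exchanged.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# Hypothetical in-memory table standing in for the real database.
RECORDS: List[Dict[str, str]] = [
    {"compound_id": "CMPD-1", "assay": "kinase"},
    {"compound_id": "CMPD-2", "assay": "protease"},
    {"compound_id": "CMPD-3", "assay": "kinase"},
]


def parse_request(xml_text: str) -> Dict[str, str]:
    """Read <filter name="...">value</filter> elements from a <query> document."""
    root = ET.fromstring(xml_text)
    return {f.get("name", ""): (f.text or "").strip() for f in root.iter("filter")}


def build_response(rows: List[Dict[str, str]]) -> str:
    """Serialize rows as <results count=N><row><col name=...>value</col></row></results>."""
    root = ET.Element("results", count=str(len(rows)))
    for row in rows:
        row_el = ET.SubElement(root, "row")
        for key, value in row.items():
            col = ET.SubElement(row_el, "col", name=key)
            col.text = value
    return ET.tostring(root, encoding="unicode")


def handle_request(xml_text: str) -> str:
    """Engine side of the exchange: parse the query, filter records, reply in XML."""
    filters = parse_request(xml_text)
    matches = [r for r in RECORDS
               if all(r.get(key) == value for key, value in filters.items())]
    return build_response(matches)
```

Sending `<query><filter name="assay">kinase</filter></query>` returns a `<results count="2">` document containing the two kinase rows. One plausible reason this style is hard to debug is visible even in a sketch this small: a mistake on either side surfaces only as malformed or unexpectedly empty XML on the other.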

When we wrote the second version of VERDI, I still wasn't a great Java programmer, so I wrote the database engine in Jython, which was compiled into Java bytecode in a jar file. This solution was a little better and made integration easier, but it was still difficult to debug interactions between the interface and the database engine. Ultimately, we ended up with a solution written entirely in Java. However, the earlier Python version allowed us to prototype rapidly and get the execution right; the subsequent Jython and Java versions followed the workflow developed with the original Python version. Over the next few years, I wrote more and more Java code and became a proficient Java programmer. After years of working at the command line, it was great to be able to create programs with graphical interfaces. It was also fun working in a language with object-oriented programming at its core. The downside was that it took a lot more code to actually do anything.

In 2003, the group at Merck published its paper on using Random Forest to build classification and regression models. At the same time, our group at Vertex was moving from purely structure-based projects to projects on membrane targets where we didn't have structures. As part of this effort, we decided to get serious about building QSAR models and started working on an infrastructure that we called LEAPS (Ligand Evaluation in the Absence of Protein Structure). I had spent time working with decision tree methods in grad school (does anyone remember C4.5 and ID3?), so I found Random Forest appealing. Fortunately, the Merck group had released the code and some of the data associated with their paper. Unfortunately, the code was written in R, and I knew next to nothing about R.

Undeterred, I bought a book on R (there were only a couple available at the time) and dove in. The learning curve was a little steep, but once I got into it, I realized how much easier it made my life. Almost every machine learning and statistical method I could think of was already there. In addition, data frames made it very easy to manipulate and plot data. We ended up building an infrastructure that did most of the chemical structure manipulation in Python, using the OpenEye toolkits, and most of the statistics and machine learning in R. The two languages were bridged with a package called RPy, which allowed us to call R functions from within Python. RPy was great, but any time we updated the version of Python or R, everything broke, and we would spend a day tearing our hair out and searching the internet for a fix.

The other great thing about R was, and still is, its ability to generate plots. The internet is filled with galleries of amazing plots that people have generated with R. The ggplot plotting library in R is almost a language in itself. Once you get over the learning curve and wrap your mind around what Hadley Wickham has created, there is so much you can do. Recent improvements in the Python data stack have reduced the amount of work I do in R, but I still come back to ggplot when I want very precise control over what I'm plotting.

Phase IV Relay 2016-Present

At the beginning of 2016, I made the difficult decision to leave Vertex and join a new start-up, Relay Therapeutics, that focused on novel ways to use protein motion in drug discovery. In my 20 years at Vertex, I had heard countless stories of the early days (I came in during year 5) and felt that I wanted the experience of building a company from the ground up. Since I was starting a new computational group, I was back to square one with no existing code base. The one decision I made going in was that all of our Python code would be written in Python 3, a decision I'm very happy about as I see most of my favorite libraries sunsetting their support for Python 2.7.

I can't say that a lot has changed in my programming practice over the last few years. Most of the code I write is in Python. As I've mentioned in previous blog posts, I'm a huge fan of Pandas and tend to use it in most of what I write. Over the last year or so, I've been getting a lot out of the RDKit and DeepChem, as well as a bit of coding with TensorFlow and Keras. The best part of this is that I've been able to build a great group that teaches me something new every day.

This work is licensed under a Creative Commons Attribution 4.0 International License.
